Maryland Governor Wes Moore signs AI deals labeled "High Risk" by the state's own criteria with Anthropic and a Palantir competitor

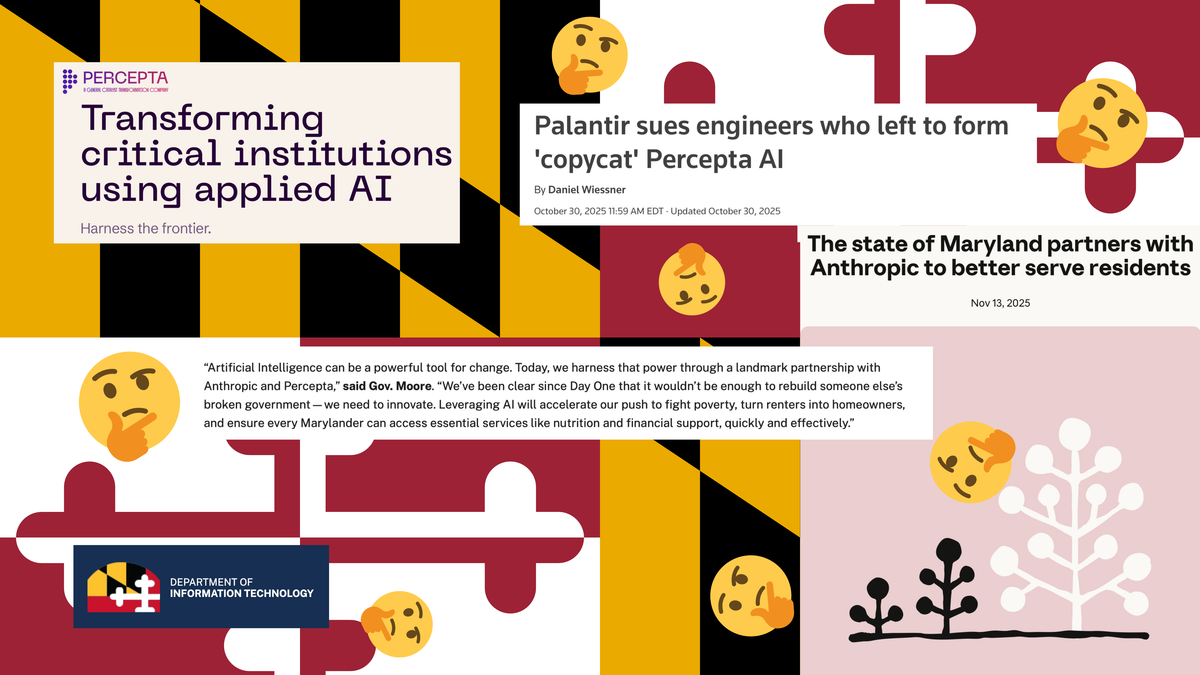

On November 13, Maryland Governor Wes Moore announced a partnership[1] between the state and Anthropic, the Amazon-sponsored creator of the Claude AI chatbot, to use its AI models for a number of government functions, including policy and benefits. On the announcement page on Anthropic's website, the company claims that Claude will be used to "connect families with benefits" and "help residents apply for essential benefits," which include SNAP and Medicaid. The company further claims that Claude will "more quickly and accurately verify" the 150,000 documents handled by caseworkers each month, "validate people's eligibility," and provide "policy guidance." It's unclear how the unresolved problem of chatbots like Claude frequently providing incorrect information will be handled when they're used to determine eligibility for state programs.

Governor Moore's AI Roadmap, released in January, declares that uses of AI in the delivery of health care and human services should have "robust AI risk management and governance." However, no details of the risk management practices that Anthropic will use as part of this partnership seem to have been released yet. Maryland's Responsible AI Policy, released in May, elaborates on this requirement, defining four levels of risk for an AI system, ranging from "Minimal" to "Unacceptable."

Using Anthropic's Claude to determine benefits eligibility clearly falls under the "High Risk" category, the highest level of risk that doesn't involve directly violating the fundamental rights of citizens. AI systems in this category include "systems that influence decisions on health, safety, law enforcement, eligibility for essential services, privacy, financial or legal rights, or other high-impact outcomes." They require an AI Risk Assessment before they are used, as well as ongoing monitoring and risk mitigation measures. No such risk assessment seems to exist for either of Maryland's deals, with Anthropic or with Percepta, indicating that this work was not done before the State signed contracts with these companies.

Even more troubling, there doesn't appear to be a clear way to access any of Maryland's AI Risk Assessments: the only Google results for these assessments appear to be the AI Policy itself and its Implementation Guidelines. Those guidelines indicate that High Risk AI systems should have a "proof-of-concept" overseen by Maryland's "Office of AI Enablement." Maryland's Department of IT hasn't put out a press release since October, casting doubt on whether the State was involved with the newly announced AI initiatives at all. This is especially true with regard to Percepta, as the company's existence was only recently made public.

Additional areas of concern are Anthropic's ethics and the company's attitude towards society as a whole. The governor's AI Roadmap calls for more training programs designed around using AI in ways that "enhance, rather than replace, human workers." However, Anthropic's official position is that advanced AI systems are not only going to replace human workers but could even pose a risk to societal safety. In a recent interview with Anderson Cooper on CBS's 60 Minutes, Anthropic's CEO Dario Amodei predicted that AI will be "smarter than most or all humans," and admitted to being scared of "losing control" of the company's AI models. He elaborates further on his own website, presenting a vision of AI as a "country of geniuses in a data center" with models "smarter than a Nobel Prize winner," while comparing AI critics to anti-vaxxers.

Anthropic's employees have expressed similar thoughts about the future. Chief of Staff Avital Balwit penned an article warning of a future where humans are "no longer the smartest and most capable entities" in the world. Another developer on Claude recently predicted that we're headed towards a future where "software engineering is done" as early as 2026. Based on the company's own statements, Anthropic's AI roadmap seems to be incompatible with the one released by Governor Moore earlier this year.

In addition to the deal with Anthropic, Governor Moore also announced a deal between the state and an AI-powered data analysis company called Percepta[2]. It's unclear exactly how Percepta's technology works, but among its advertised features is a "90%+ time reduction for critical state permitting processes," while the company's recent reveal claims that it will "help governments streamline licensing and permitting processes with AI."

Percepta is a brand new company, first unveiled by its venture capitalist sponsor, General Catalyst, just six weeks before Wes Moore announced that Maryland would be working with it. That raises questions about how the deal with the state was negotiated. As with Anthropic, the nature of Percepta's business seems to indicate that it is also a High Risk AI system that deserves special scrutiny under Maryland practices, something that doesn't seem possible with a company that has no proven track record.

Complicating matters even more, Percepta was sued a few weeks ago by the former employer of its co-founder Radha Jain and several of Jain's peers: Palantir.

Palantir has become infamous as the face of big tech surveillance for reasons ranging from creating a database that can be used to track all Americans to racially biased algorithmic policing. In one 2021 incident, unauthorized FBI employees were able to access data on a criminal suspect using Palantir's services. CEO Alex Karp, who believes in the "obvious, innate superiority" of Western countries, has explicitly described Palantir's aim as being to "scare our enemies and, on occasion, kill them."

In its October 30th complaint against Percepta, the tech giant accuses two of Percepta's co-founders of violating non-compete agreements and stealing "highly confidential information to use at Percepta" in order to "build a Palantir copycat under the cover of darkness." As evidence, Palantir cites an interview with General Catalyst managing director Hemant Taneja, in which he says that he has been working on a "version" of Palantir's business model. The complaint further notes that a full half of Percepta's employees were poached from Palantir, and argues that Percepta has shared Palantir's confidential information with potential customers, which could include Maryland.

If the court sides with Palantir and issues a preliminary injunction against Percepta's employees for violations of non-competition and non-solicitation agreements, it may affect Percepta's business relationship with Maryland. The ongoing dispute between the two companies has not been mentioned by Governor Moore.

- This partnership is supported by the Rockefeller Foundation, a massive philanthropic group, though it's unclear how much the foundation has contributed financially to make it possible. ↩︎

- Not to be confused with an earlier AI startup called Percepta that produced anti-shoplifting surveillance technology and was acquired by commercial security company ADT Commercial (now called Everon). ↩︎