AI Doomsday Cult Primer

When the topic of AI comes up, I often find it difficult to succinctly summarize how crazy the technology's most dedicated enthusiasts are without sounding like a conspiracy theorist. This led me to compile this list of articles that, when viewed as a whole, clearly show that worries about a coming "superintelligent" AI are closer to an apocalyptic science fiction-based religion than a serious concern based in science. I've highlighted important excerpts from each piece that demonstrate the cult-like tendencies of AI enthusiast communities, their ties to libertarianism and racism, and their influence on major AI labs and the Donald Trump administration.

The Doomers

The three most popular labs developing large language models - OpenAI, Google DeepMind, and Anthropic - all have the stated goal of building a truly sentient AI, a concept dubbed "artificial general intelligence" (AGI). This is tied to a belief in a forthcoming event called "The Singularity" after which technology will become exponentially more powerful, fundamentally reshaping every aspect of humanity.

Tech oligarchs are gambling our future on a fantasy - Adam Becker for The Guardian describes how belief in AGI and the Singularity fuels decision making in Silicon Valley. The article notes that a recent survey of AI researchers shows that 76% of them believe that neural nets such as ChatGPT are "fundamentally unsuitable" for AGI. Despite this, the belief that AGI is not just possible but imminent is common among many powerful people in tech.

In the world of Silicon Valley CEOs and venture capitalists (and among credulous journalists and policymakers), there is a widespread belief that AGI is coming very soon, within a few years. This unquestioned faith in AGI is linked to a broader myth about the future of technology in general. Once AGI arrives, the story goes, it will quickly and inevitably become super-intelligent, far surpassing individual humans or even humanity as a whole in its capabilities. This will lead to an explosion in scientific and technological development that dwarfs the Industrial Revolution, known as the Singularity. The Singularity will reshape the lives of all humans, enabling seemingly magical results like easy space travel, immortality or near-immortality, perfect virtual reality and limitless energy, all within a few years or less – and the AI’s super-intelligent beneficence will render democracy obsolete.

What OpenAI Really Wants - This Wired article by Steven Levy delves into OpenAI's plans for AGI. As it turns out, their plans are to upend all of society, to the extent that their lawyers have accounted for it.

The company’s financial documents even stipulate a kind of exit contingency for when AI wipes away our whole economic system.

Levy goes on to describe the vibe at OpenAI:

It’s not fair to call OpenAI a cult, but when I asked several of the company’s top brass if someone could comfortably work there if they didn’t believe AGI was truly coming—and that its arrival would mark one of the greatest moments in human history—most executives didn’t think so. Why would a nonbeliever want to work here? they wondered. The assumption is that the workforce—now at approximately 500, though it might have grown since you began reading this paragraph—has self-selected to include only the faithful. At the very least, as Altman puts it, once you get hired, it seems inevitable that you’ll be drawn into the spell.

I do think it's fair to call OpenAI a cult, and I hope that the rest of the sources I've collected for this article demonstrate why.

What Ilya Sutskever Really Wants - Dr. Nirit Weiss-Blatt has covered this topic extensively on her AI Panic blog and provided additional commentary on the above Wired piece, describing her experience of going to a talk by OpenAI's then-head of research, Ilya Sutskever.

(Wired's article) reminded me of what Ilya Sutskever said in May 2023 (at a public speaking event in Palo Alto, California). His entire talk was about how AGI “will turn every single aspect of life and of society upside down.” When asked about specific professions – book writers/ doctors/ judges/ developers/ therapists – and whether they are extinct in one year, five years, a decade, or never, Ilya Sutskever answered (after the developers’ example): "It will take, I think, quite some time for this job to really, like, disappear. But the other thing to note is that as the AI progresses, each one of these jobs will change. They'll be changing those jobs until the day will come when, indeed, they will all disappear."

When asked about AI’s role in “shaping democracy,” Ilya Sutskever answered: “If we put on our science fiction hat, and imagine that we solved all the, you know, the hard challenges with AI and we did this, whatever, we addressed the concerns, and now we say, ‘okay, what's the best form of government?’ Maybe it's some kind of a democratic government where the AI is, like, talks to everyone and gets everyone's opinion, and figures out how to do it in like a much more high bandwidth way.”

Dr. Weiss-Blatt details some other crazy things Ilya Sutskever has said regarding AGI:

On Twitter, he wrote that “it may be that today’s large neural networks are slightly conscious.” He later asked his followers, “What is the better overarching goal”: “deeply obedient ASI” [Artificial Superintelligence] or an ASI “that truly deeply loves humanity.” In a TIME magazine interview, in September 2023, he said: "The upshot is, eventually, AI systems will become very, very, very capable and powerful. We will not be able to understand them. They’ll be much smarter than us. By that time, it is absolutely critical that the imprinting is very strong, so they feel toward us the way we feel toward our babies."

She summarizes Sutskever's talk as a "traumatizing" display of his apocalyptic AI ideology that "freaked the hell out of" professional AI researchers present.

Sam Altman's Manifest Destiny - Tad Friend penned a profile of OpenAI co-founder and CEO Sam Altman for The New Yorker. A shocking quote from Altman reveals the extent to which he's obsessed with the end of the world:

“My problem is that when my friends get drunk they talk about the ways the world will end. After a Dutch lab modified the H5N1 bird-flu virus, five years ago, making it super contagious, the chance of a lethal synthetic virus being released in the next twenty years became, well, nonzero. The other most popular scenarios would be A.I. that attacks us and nations fighting with nukes over scarce resources.” The Shypmates looked grave. “I try not to think about it too much,” Altman said. “But I have guns, gold, potassium iodide, antibiotics, batteries, water, gas masks from the Israeli Defense Force, and a big patch of land in Big Sur I can fly to.”

It's worth noting that this interview was conducted way back in 2016, well before ChatGPT even existed.

Inside the White-Hot Center of AI Doomerism (NYT) - This article for the New York Times by Kevin Roose profiles the EA-aligned AI company Anthropic and the doomers who make up its staff:

One Anthropic worker told me he routinely had trouble falling asleep because he was so worried about A.I. Another predicted, between bites of his lunch, that there was a 20 percent chance that a rogue A.I. would destroy humanity within the next decade.

Roose's article also covers the company's close ties to the effective altruism movement, describing it as "a way for hyper-rational people to convince themselves that their values are objectively correct" that has "a strong presence in the Bay Area tech scene".

The Cult Aspects

The philosophies behind effective altruism and rationality are conducive to creating cult-like attitudes. Both groups purport to have created an optimal strategy for organizing all aspects of life, leading to an insular, elitist community with an interest in recruiting more members. Combined with a fear of imminent doom from AI and a belief that the rest of the world is ignoring the issue, the end result is a group that is shockingly similar to a stereotypical doomsday cult.

An interview with someone who left Effective Altruism - Math blogger Cathy O'Neil interviews an ex-follower about the cult-like elements of the effective altruism (EA) movement, including efforts to recruit impressionable college students and change their career paths. Over the course of the interview, the ex-follower uses the terms "cult" and "brainwashing" to describe the overall effect of buying into EA ideology.

Cathy: I mean, okay, like you just reminded me of my actual last question, which is, you said brainwashed. When we first met, you said cult. Can you riff on that a bit? What do you think is actually happening overall?

E: Yeah. And also I want to be fair and say, you know, I think that this doesn’t represent everyone who’s in EA. I have definitely met a good chunk of people who got into this in the first place for the right reasons, and who are still there for the right reason.

But I think there’s a really scary intentionality to get people involved in this movement, to not just get involved, but really hooked to them. Because the idea of EA is that it’s also a lifestyle. It’s not just a cultural movement or a way to the job market, but it is just about how you think about the world and how you spend your life, and where you donate, and what you need to do with your career, And even how you eat, whether you’re vegan or how you source your food, it’s all of those things. In order to involve people in a movement that’s so broad and that spans every aspect of your life and how you live it, you need to really get that philosophical buy-in for it, you need to sell people on it and get them to commit for life.

And so I think that it starts at the university level. And then people start there, and they completely get indoctrinated into it. And there’s a very intense intentionality behind that, a strategy behind how they’re convincing 19-year-olds to change their career path and what they want to do with their lives and how they think and all of it...

The interviewee describes how effective altruism can become an all-consuming ideology, preventing followers of the movement from enjoying life.

E: Another issue that I have with EA is that, in order to be effective, it’s important to do high impact work, but I also think in doing that you have to also be happy and have a fulfilled life. You know? Like, find some meaning in your life, so that also means things like building community and making art. And I think that, in this philosophical framework of impactfulness and effectiveness, there was no room for love or community or empathy or creativity. It was just like these are in the four or five genres where you can be the most impactful with your career. And if you’re not working one of those, you are not being impactful, or you aren’t making the most impact you could.

Cathy: It’s a weird cult in the sense of like, why AI? But when you put it together like you did with here’s how you should eat, here is what you work on, here how you should think, here’s what you shouldn’t think about, and then there’s like that intense recruitment and it’s not just like, try this out, it’s a lifelong commitment. It really adds up to something kind of spooky.

E: It does, yeah. And again, I think that there are incredible people that are involved with EA, but I sometimes think it is possible to pick out parts of EA that are really good and helpful and smart without buying into other parts of it. And I think that’s easier for adults who have come into it later in life, and harder for people who enter the movement as students and are kind of brainwashed from the get-go to zoom out and see it that way.

The Real-Life Consequences of Silicon Valley’s AI Obsession - This excellent investigation by Ellen Huet for Bloomberg is the most succinct summary of the cult aspects of Rationality and abuse within the community that I've read. This is one of the articles that shocked me so much that I needed to investigate the community more.

Several current and former members of the community say its dynamics can be “cult-like.” Some insiders call this level of AI-apocalypse zealotry a secular religion; one former rationalist calls it a church for atheists. It offers a higher moral purpose people can devote their lives to, and a fire-and-brimstone higher power that’s big on rapture. Within the group, there was an unspoken sense of being the chosen people smart enough to see the truth and save the world, of being “cosmically significant,” says Qiaochu Yuan, a former rationalist.

Yuan started hanging out with the rationalists in 2013 as a math Ph.D. candidate at the University of California at Berkeley. Once he started sincerely entertaining the idea that AI could wipe out humanity in 20 years, he dropped out of school, abandoned the idea of retirement planning, and drifted away from old friends who weren’t dedicating their every waking moment to averting global annihilation. “You can really manipulate people into doing all sorts of crazy stuff if you can convince them that this is how you can help prevent the end of the world,” he says. “Once you get into that frame, it really distorts your ability to care about anything else.”

As is typical with cults, several female effective altruists and Rationalists have described being victims of sexual abuse and harassment within their communities.

Within the subculture of rationalists, EAs and AI safety researchers, sexual harassment and abuse are distressingly common, according to interviews with eight women at all levels of the community. Many young, ambitious women described a similar trajectory: They were initially drawn in by the ideas, then became immersed in the social scene. Often that meant attending parties at EA or rationalist group houses or getting added to jargon-filled Facebook Messenger chat groups with hundreds of like-minded people.

The article goes into detail about several abusive incidents in the community, including abuse by a friend of Peter Thiel.

The Racism

The obsession of AGI believers with quantifying and increasing AI's intelligence leads to a tendency to embrace IQ as a real measurement. The Rationality community also has a broad interest in concepts deemed taboo by society, leading them towards racism. The result is that race science, eugenics, and "group differences in IQ" (the idea that non-white minority groups are inherently less intelligent) are deeply embedded within the culture of the community, and these concepts have subsequently bled into commercial AI research conducted by those in the field sympathetic to Rationality.

Silicon Valley's Safe Space - A major exposé from Cade Metz in the New York Times delves deep into the history of the Rationality community, with a particular focus on two prominent writers: Rationality creator Eliezer Yudkowsky and Scott Alexander, a blogger whose work has been extremely influential in the community. The article covers the community's enthusiasm for race science, its influence on major AI labs, and its ties to Peter Thiel and Elon Musk.

In 2005, Peter Thiel, the co-founder of PayPal and an early investor in Facebook, befriended Mr. Yudkowsky and gave money to MIRI. In 2010, at Mr. Thiel’s San Francisco townhouse, Mr. Yudkowsky introduced him to a pair of young researchers named Shane Legg and Demis Hassabis. That fall, with an investment from Mr. Thiel’s firm, the two created an A.I. lab called DeepMind.

Like the Rationalists, they believed that A.I could end up turning against humanity, and because they held this belief, they felt they were among the only ones who were prepared to build it in a safe way.

In 2014, Google bought DeepMind for $650 million. The next year, Elon Musk — who also worried A.I. could destroy the world and met his partner, Grimes, because they shared an interest in a Rationalist thought experiment — founded OpenAI as a DeepMind competitor. Both labs hired from the Rationalist community.

The NYT piece also delves into Alexander's persistent intermingling with race science and neoreactionaries (despite claiming to not be racist):

In one post, he aligned himself with Charles Murray, who proposed a link between race and I.Q. in “The Bell Curve.” In another, he pointed out that Mr. Murray believes Black people “are genetically less intelligent than white people.”

He denounced the neoreactionaries, the anti-democratic, often racist movement popularized by Curtis Yarvin. But he also gave them a platform. His “blog roll” — the blogs he endorsed — included the work of Nick Land, a British philosopher whose writings on race, genetics and intelligence have been embraced by white nationalists.

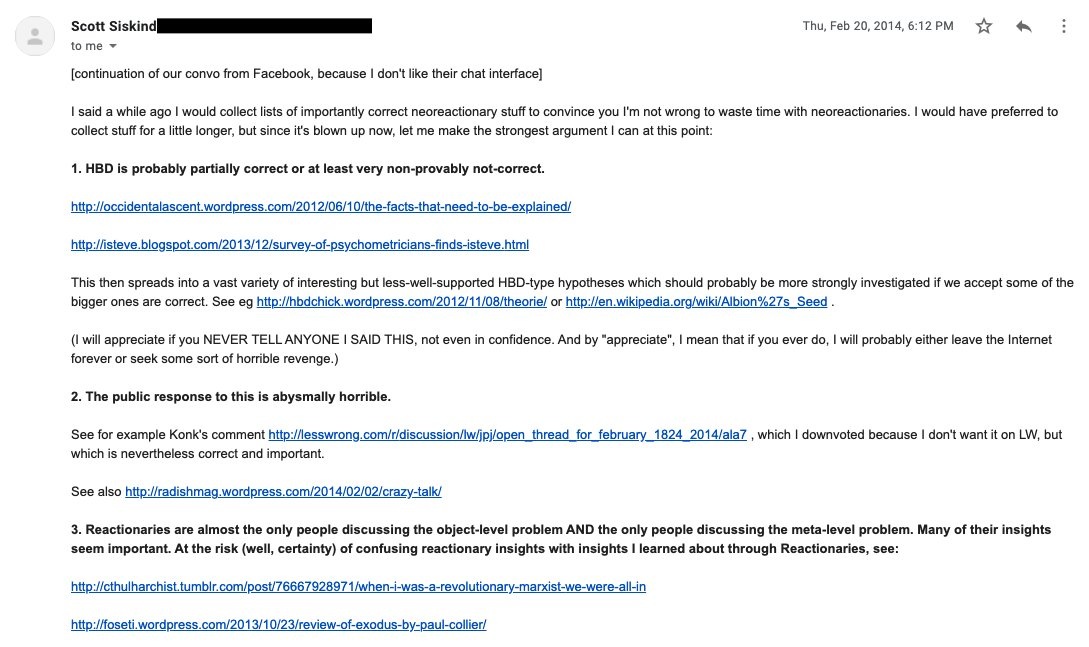

As it turns out, Alexander also wrote an email to another rationalist in which he privately expressed support for white supremacist "human biodiversity" theories, a position he knew would rightfully earn him ire if it were discovered.

Human Biodiversity - A series of posts written by David Thorstad for his Reflective Altruism blog explains the connection between AI doomer communities, effective altruism, and race science. Some of Thorstad's posts focus on specific individuals such as Richard Hanania, while others look into what ideas are broadly held by users of the de facto forums for Rationality and EA: LessWrong, founded by Rationality creator Eliezer Yudkowsky with the support of Peter Thiel, and the official Effective Altruism forum, which uses LessWrong's software.

In his post about LessWrong, Thorstad explores the reasons why Rationalists are attracted to these topics, explaining that they believe that "ideas outside the Overton window of allowable topics for discussion should nonetheless be discussed and seriously examined."

Speaking about LessWrong specifically, he details the community's enthusiasm to debate and embrace ideologies well outside the scientific mainstream:

A deeply held emphasis on serious engagement with unpalatable ideas leads many rationalists to hone in on those ideas. Those sympathetic to HBD put the point even more strongly. One commentator writes: "I think HBD is a fantastic test for true rationality. It’s a rare case where a scientific fact conflicts with deeply ingrained political and cultural values."

Thorstad also cites a game played at a LessWrong meetup in 2022 called "Overton Gymnastics", an "exercise in discomfort" in which participants are encouraged to share and analyze each other's most controversial beliefs.

Sam Bankman-Fried funded a group with racist ties. FTX wants its $5m back - Jason Wilson and Ali Winston write for The Guardian about ties between the Rationality/EA communities and race science by way of FTX, Sam Bankman-Fried's shuttered crypto exchange, from which he embezzled billions of dollars.

Multiple events hosted at a historic former hotel in Berkeley, California, have brought together people from intellectual movements popular at the highest levels in Silicon Valley while platforming prominent people linked to scientific racism, the Guardian reveals.

But because of alleged financial ties between the non-profit that owns the building – Lightcone Infrastructure (Lightcone) – and jailed crypto mogul Sam Bankman-Fried, the administrators of FTX, Bankman-Fried’s failed crypto exchange, are demanding the return of almost $5m that new court filings allege were used to bankroll the purchase of the property.

One of the events the Guardian discovered was Manifest, a conference for enthusiasts of prediction markets that let you gamble on any event. I recently covered Manifest's 2025 conference and found it to have just as many race scientists as in previous years.

The Wide Angle: Understanding TESCREAL — the Weird Ideologies Behind Silicon Valley’s Rightward Turn - Writing for The Washington Spectator, Dave Troy breaks down the TESCREAL acronym coined by Émile Torres and Dr. Timnit Gebru to describe the overlapping fantastical ideologies in the tech/AI space.

TESCREAL is a convergent Venn diagram of overlapping ideologies that, because they often attract contrarian young men, tend to co-occur with other male-dominated reactionary and misogynistic movements. The Men’s Rights movement (Manosphere), the MGTOW movement (Men Going Their Own Way), and PUA (Pick Up Artist) communities are near-adjacent to the TESCREAL milieu.

Combining complex ideologies into such a “bundle” might seem to be dangerously reductive. However, as information warfare increasingly seeks to bifurcate the world into Eurasian vs. Atlanticist spheres, traditionalist vs. “woke,” fiat vs. hard currency, it’s difficult not to see the TESCREAL ideologies as integral to the Eurasianist worldview.

The Political Ramifications

In accordance with their beliefs and with the financial support of a handful of sympathetic tech moguls, Rationalists and effective altruists have launched lobbying and PR campaigns aimed at slowing AI development they deem dangerous. This strategy has seen some success, with a handful of American and British politicians being convinced that existential risks from AGI are real.

Simultaneously, the Rationality-adjacent right-wing neoreaction (NRx) movement has come to be influential in parts of Donald Trump's administration. Elon Musk in particular has publicly expressed both his belief in a risk to humanity from AI and his many racist views. Also linking Rationality to the right are tech investor Peter Thiel, OpenAI CEO Sam Altman, and anti-democratic writer Curtis Yarvin.

How Silicon Valley doomers are shaping Rishi Sunak’s AI plans - Laurie Clarke writes for Politico about the successful lobbying efforts of EAs to influence the UK's AI regulations in 2023.

The country's artificial intelligence white paper — unveiled in March — dealt with the “existential risks” of the fledgling tech in just four words: high impact, low probability.

Less than six months later, Prime Minister Rishi Sunak seems newly troubled by runaway AI. He has announced an international AI Safety Summit, referred to “existential risk” in speeches, and set up an AI safety taskforce with big global aspirations.

Helping to drive this shift in focus is a chorus of AI Cassandras associated with a controversial ideology popular in Silicon Valley. Known as "Effective Altruism," the movement was conceived in the ancient colleges of Oxford University, bankrolled by the Silicon Valley elite, and is increasingly influential on the U.K.’s positioning on AI.

One researcher for an effective altruism-affiliated group straightforwardly admitted that there was a concerted effort on the part of EAs to infiltrate the UK government.

The movement has been accruing political capital in the U.K. for some time, says Luke Kemp, a research affiliate with the Centre for the Study of Existential Risk who doesn’t identify as EA. “There’s definitely been a push to place people directly out of existential risk bodies into policymaking positions,” he says.

Inside the New Right, Where Peter Thiel Is Placing His Biggest Bets - This Vanity Fair piece by James Pogue links Peter Thiel to Blake Masters, Curtis Yarvin, and now-Vice President JD Vance.

Thiel has given more than $10 million to super PACs supporting the men’s candidacies, and both are personally close to him. Vance is a former employee of Thiel’s Mithril Capital, and Masters, until recently the COO of Thiel’s so-called “family office,” also ran the Thiel Foundation, which has become increasingly intertwined with this New Right ecosystem. These three—Thiel, Vance, Masters—are all friends with Curtis Yarvin, a 48-year-old ex-programmer and blogger who has done more than anyone to articulate the world historical critique and popularize the key terms of the New Right.

The piece also mentions Yarvin's idea to “Retire All Government Employees”. The Trump/Vance administration recently attempted to put RAGE into practice with the help of Elon Musk's "DOGE" initiative.

When he took questions at the end, they were mostly the usual ones about the supposedly stolen 2020 election—a view that Masters did not push back on—the border wall, vaccine mandates. One man raised his hand to ask how Masters planned to drain the swamp. He gave me a sly look. “Well, one of my friends has this acronym he calls RAGE,” he said. “Retire All Government Employees.” The crowd liked the sound of this and erupted in a cheer.

Curtis Yarvin’s Plot Against America - Writing for The New Yorker, Ava Kofman profiles Curtis Yarvin's background, his relationship with Thiel, and the Rationality community's flirtations with his writing.

Before long, links to Moldbug’s blog, “Unqualified Reservations,” were being passed around by libertarian techies, disgruntled bureaucrats, and self-styled rationalists—many of whom formed the shock troops of an online intellectual movement that came to be known as neo-reaction, or the Dark Enlightenment.

The most generous engagement with Yarvin’s ideas has come from bloggers associated with the rationalist movement, which prides itself on weighing evidence for even seemingly far-fetched claims.

Back in 2013, Yarvin started a company called Tlon with Thiel's help. Even at the time, Thiel realized that associating with someone who openly advocates for overthrowing democracy would be problematic.

Tlon’s investors included the venture-capital firms Andreessen Horowitz and Founders Fund, the latter of which was started by the billionaire Peter Thiel. Both Thiel and Balaji Srinivasan, then a general partner at Andreessen Horowitz, had become friends with Yarvin after reading his blog, though e-mails shared with me revealed that neither was thrilled to be publicly associated with him at the time. “How dangerous is it that we are being linked?” Thiel wrote to Yarvin in 2014. “One reassuring thought: one of our hidden advantages is that these people”—social-justice warriors—“wouldn’t believe in a conspiracy if it hit them over the head (this is perhaps the best measure of the decline of the Left). Linkages make them sound really crazy, and they kinda know it.”

Sam Altman Is the Oppenheimer of Our Age - Writing for New York Magazine, Elizabeth Weil profiles OpenAI's Sam Altman, including a focus on the increasingly visible reactionary and racist attitudes held by those in his tech circles.

“I definitely think there’s this tone of, like, ‘I told you so,’” another person close to Altman’s inner circle told me about the state of the industry. “Like, ‘You worried about all of this bullshit’” — this “bullshit” being diversity, equity, and inclusion — “and like, ‘Look where that got you.’” Tech layoffs, companies dying. This is not the result of DEI, but it’s a convenient excuse. The current attitude is: Woke culture peaked. “You don’t really need to pretend to care anymore.”

A Black entrepreneur — who, like almost everybody in tech I spoke to for this article, didn’t want to use their name for fear of Altman’s power — told me they spent 15 years trying to break into the white male tech club. They attended all the right schools, affiliated with all the right institutions. They made themselves shiny, successful, and rich. “I wouldn’t wish this on anybody,” they told me. “Elon and Peter and all of their pals in their little circle of drinking young men’s blood or whatever it is they do — who is going to force them to cut a tiny slice, any slice of the pie, and share when there’s really no need, no pressure? The system works fine for the people for whom it was intended to work.”

Putting It All Together

All of the craziness, racism, cult aspects, and science fiction of the Rationality community are perfectly exemplified in a 2015 Harper's article by Sam Frank called Come With Us If You Want to Live: Among the apocalyptic libertarians of Silicon Valley. In it, Frank narrates his experience of immersing himself amongst Rationalists, focusing on his visit to that year's Singularity Summit. It lays bare the degree to which people in this group believe that science fiction concepts will soon become real.

In June 2013, I attended the Global Future 2045 International Congress at Lincoln Center. The gathering’s theme was “Towards a New Strategy for Human Evolution.” It was being funded by a Russian new-money type who wanted to accelerate “the realization of cybernetic immortality”; its keynote would be delivered by Ray Kurzweil, Google’s director of engineering. Kurzweil had popularized the idea of the singularity. Circa 2045, he predicts, we will blend with our machines; we will upload our consciousnesses into them.

Also on display are the elitist attitudes often held by Rationalists, as voiced by the community's founder, Eliezer Yudkowsky.

Yudkowsky stopped me and said I might want to turn my recorder on again; he had a final thought. “We’re part of the continuation of the Enlightenment, the Old Enlightenment. This is the New Enlightenment,” he said. “Old project’s finished. We actually have science now, now we have the next part of the Enlightenment project.”

The workshop attendees put giant Post-its on the walls expressing the lessons they hoped to take with them. A blue one read RATIONALITY IS SYSTEMATIZED WINNING. Above it, in pink: THERE ARE OTHER PEOPLE WHO THINK LIKE ME. i am not alone.

Paired with these attitudes are outlandish libertarian political ideas, which were casually thrown out at the Summit. One attendee suggested hiring sex workers to satisfy the sex drives of mathematicians, while another claimed that no one has a right to housing and that programmers are the people who matter.

Sam Frank's article also introduces us, in the context of LessWrong Rationalists, to Blake Masters, a venture capitalist and protégé of Peter Thiel who would later run for office in Arizona with the support of JD Vance.

Some months later, I came across the Tumblr of Blake Masters, who was then a Stanford law student and tech entrepreneur in training. His motto — “Your mind is software. Program it. Your body is a shell. Change it. Death is a disease. Cure it. Extinction is approaching. Fight it.” — was taken from a science-fiction role-playing game. Masters was posting rough transcripts of Peter Thiel’s Stanford lectures on the founding of tech start-ups.

“I no longer believe that freedom and democracy are compatible,” Thiel wrote in 2009. Freedom might be possible, he imagined, in cyberspace, in outer space, or on high-seas homesteads, where individualists could escape the “terrible arc of the political.”

Blake Masters — the name was too perfect — had, obviously, dedicated himself to the command of self and universe. He did CrossFit and ate Bulletproof, a tech-world variant of the paleo diet. On his Tumblr’s About page, since rewritten, the anti-belief belief systems multiplied, hyperlinked to Wikipedia pages or to the confoundingly scholastic website Less Wrong: “Libertarian (and not convinced there’s irreconcilable fissure between deontological and consequentialist camps). Aspiring rationalist/Bayesian. Secularist/agnostic/ignostic . . . Hayekian. As important as what we know is what we don’t. Admittedly eccentric.”

The fact that so many people associated with the cult-like milieu that has developed in Silicon Valley (Thiel, Elon Musk, Vance, Masters, Altman) are now involved not just with AI regulation but with the ongoing destruction of American democracy should raise alarm bells for anyone concerned about the future of our planet.